Ruth Hennell, 06 Jun 2024

All human beings have limitations when it comes to accessing nature. We can’t crawl in soil like a tiny worm, enter small habitats or fly in the trees like birds.

"As a disabled and chronically-ill person, I’ve found going slow and lying down due to increased pain and reduced mobility gave me a ‘snail’s eye’ view and meant I noticed and appreciated the messy, imperfect reality of nature more" says Ruth Hennell, a disabled inter-disciplinary designer and part-time OU student, from Bristol.

Accessibility barriers, coupled with fatigue, pain, broken lifts, poor transport, and adverse weather conditions, can make it challenging to get outside. In fact, DEFRA found 31% of reasons for not visiting natural environments were related to age and health. Sport England reported that disabled people more often engage in activities within 10 miles of home.

Ruth has a unique perspective due to her heightened awareness of human limits and deep connection with her local neighbourhood, which she accesses, experiences and appreciates differently. She uses this to gain fresh insights which can help re-enchant us with our environments, playing with the boundaries of human perception.

"Those who have lived with pain for years have taught me so much" says Ruth. She draws on disability wisdom from writers like Louise Kenward, editor of the Moving Mountains anthology and from the conversations and support of others in her work.

This spring, Ruth joined Friends of the Earth’s recent design Lab to explore a question:

Can AI and sensor technology allow us to experience and empathise with the viewpoints of other beings?

Ruth says, "I often use technology to help me access nature, like many of my disabled and chronically-ill friends and family, saving and sharing photos, creating dancing plant GIFs, videos, and virtual walks to access nature at home."

"Last autumn I was stuck in my flat for 3 weeks, and joked I would love a Snail Robot to remotely access the allotments where our Garden Lab project in Knowle West, Bristol took place. We built a simple prototype Snail Bot with a small camera, cardboard on a remote control car".

The AI opportunity

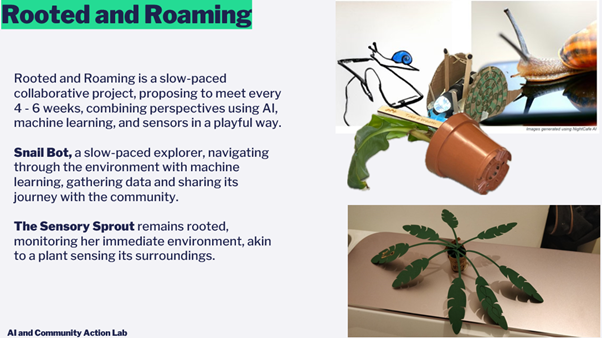

Through the Lab, Ruth has been taking this idea further with the use of generative AI, developing early-stage prototypes for two bots designed to be placed in areas of Bristol that are usually inaccessible to humans. One bot is rooted like a plant, and the other roams like a small animal. Both bots sense their environment and are linked to Large Language Models trained on data about the plant or animal they mimic. They share their experiences of environmental conditions with human collaborators.

Snail Bot is a slow-paced explorer, navigating through the environment with machine learning, not unlike a robot vacuum cleaner, guided rather than controlled, gathering data and sharing its journey with the community.

Alongside it, the Sensory Sprout remains rooted, sensing things like pressure, warmth, light, wind and smell, akin to a plant sensing its surroundings. It wants to help humans connect those conditions with how they affect plants and humans. Both seek to move beyond traditional ideas of access and numerical data, to perceive and experience the local environment in new ways.

Imagine…

Jake, an artist with a chronic illness, learns about Sensory Sprout from a friend. The Sensory Sprout's ability to monitor above and below-ground environmental changes can help Jake understand how local conditions may affect his health. He follows the Sensory Sprout on X where he enquires about local climate conditions. The Sensory Sprout's microcontroller processes Jake's queries, controlling sensors for wind and smell. An animated GIF response from the Sensory Sprout, stemming from Jake's inquiries, serves as inspiration for his artwork.

The bots safely explore new local spaces without causing harm. They draw upon the diverse ways different living beings experience and perceive the world to help both disabled and non-disabled people access and experience natural spaces in ways they wouldn't usually be able to, finding new relationships, ways of being and inspiration.

There are ethical challenges to navigate

There were mixed responses to the bots' AI voices. While some enjoyed them, others expressed concern about anthropomorphism. They felt that plants and animals have their own ways of communicating and seeing the world, and that giving them a voice would be an attempt to make them more human. If we want to use AI to understand how a snail sees the world, adding a human voice would be going in the wrong direction.

Training Large Language Models (LLMs) on inherently limited human data may open the door for us to perceive in different ways but falls short of sharing the experience of another being.

More testing is needed to see whether the bots will increase empathy and creativity. Ruth is concerned that the project could unintentionally reinforce disability simulation practices, which have been criticised for failing to communicate the complex, varied lived experiences of disabled people, or act as a 'Disability Dongle': a technological solution that overlooks more direct ways of resolving accessibility barriers.

She says, "Co-creating with other disabled and chronically-ill people is so key to purposeful development of the emerging ideas and to explore the intersection of AI with accessibility."

Considering environmental impact

Ruth envisages a grassroots approach to technology in the project. "I think a lot of people assume AI is simply web-based ChatGPT. It creates a lot of assumptions and also perhaps centres more extractive or less secure approaches." This is really hard to navigate, but Ruth is exploring some options, including:

- Hosting smaller language models locally. This reduces dependence on cloud-based generation from energy-intensive data centres.

- Combining AI with a simple database of quotes from books, real-life images and GIFs, which allows the bots to respond to users without the need to generate new content using AI.

- Saving and reusing any AI-generated content that is produced.
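
One way these options could fit together is sketched below. This is only an illustration, not the project's actual code: the `BotResponder` class, the `CURATED_REPLIES` entries and the `fake_local_model` function are all hypothetical stand-ins. The idea is that a bot tries a curated database first, then any saved AI-generated reply, and only asks a (locally hosted) model for new content as a last resort.

```python
from typing import Callable, Dict

# Hypothetical curated replies keyed by a sensed condition.
# In practice these might point to book quotes, photos or GIFs.
CURATED_REPLIES: Dict[str, str] = {
    "wind": "The leaves are trembling today.",
    "light": "Sunlight is reaching the soil this morning.",
}


class BotResponder:
    """Answers a query using the least energy-hungry source first."""

    def __init__(self, generate: Callable[[str], str]) -> None:
        self.generate = generate          # stand-in for a locally hosted model
        self.cache: Dict[str, str] = {}   # saved AI-generated replies
        self.generation_calls = 0         # how often new content was generated

    def respond(self, query: str) -> str:
        key = query.strip().lower()
        # 1. Curated database: no AI needed if a known topic is mentioned.
        for topic, reply in CURATED_REPLIES.items():
            if topic in key:
                return reply
        # 2. Reuse a previously generated reply to the same question.
        if key in self.cache:
            return self.cache[key]
        # 3. Last resort: generate new content, then save it for reuse.
        self.generation_calls += 1
        reply = self.generate(query)
        self.cache[key] = reply
        return reply


def fake_local_model(prompt: str) -> str:
    """Placeholder for a small local language model (an assumption)."""
    return f"[generated reply to: {prompt}]"


if __name__ == "__main__":
    bot = BotResponder(fake_local_model)
    print(bot.respond("How is the wind today?"))   # answered from the database
    print(bot.respond("What is under the soil?"))  # generated once
    print(bot.respond("What is under the soil?"))  # reused from the cache
    print("Generation calls:", bot.generation_calls)
```

In this sketch the model is only called when neither the database nor the cache can answer, so the `generation_calls` counter gives a rough sense of how much new AI content a bot actually needed.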

"With this project I think it’s about finding the sweet spot of where and where not to use AI and not using it where there are more sustainable options" says Ruth. Simple sensor technology may not call for AI use, for instance.

Would you like to get involved?

To develop Snail Bot and Sensory Sprout further, Ruth wants to bring together a group of collaborators in a community called Rooted and Roaming. She would like to hold some snail's-pace co-creation sessions to play with secondhand robotics kits and explore the possibilities of Large Language Models (LLMs).

Imagine a co-creation group that meets for three hours each month, dedicated to co-designing and experimenting together. Rooted and Roaming will take a slow, steady, and inclusive approach, resulting in a project accessible to Ruth and her collaborators and challenging the accelerant approach of mainstream AI.

Would you like to join Ruth on a slow and steady journey of growth for Snail Bot and Sensory Sprout? Contact her at [email protected].