Mary Stevens, 23 Aug 2023

Does the arrival and super-fast development of generative AI technologies risk entrenching inequality and undermining efforts to tackle the climate and biodiversity crises?

This is a question that deeply concerns Friends of the Earth. Our strategy warns of the risk of epistemic chaos if society does not share a coherent enough knowledge base to work towards a common good, and if our movement fails to influence the design and application of generative AI. Divisive politics, the existence of deepfakes and the potential collapse of trust in what is true have huge implications for effective engagement with climate solutions and our ability to motivate people to take action.

Empowered to explore these big existential questions, our Experiments Team recently hosted an internal ‘design lab’ to identify leverage points, where a small intervention could be taken up and amplified to create significant change. We aimed to come up with a number of proposals for interventions that could help us probe the system and learn how it might respond.

This is an overview of what we did, the conclusions we drew and what we plan to do next. In the lab we identified three key areas where we think we can have an impact. We will cover each of these themes in more detail in a follow-up article. We don’t have the resources to pursue all of these themes ourselves, so we hope these articles will help us gauge wider interest and understand where the energy for action is in our network.

If you would like to explore any of these topics with us please get in touch at [email protected]. We know that we can’t do any of this work alone.

Our approach

We gathered a team of seven colleagues from a range of different areas (fundraising, digital activism, IT support, campaigns). We also invited an eighth participant, code name NAIgel, a persona generated by ChatGPT, intended to act as an expert in responsible technology design, who would respond to the same prompts and challenges as our human collaborators. We then designed a series of 3-hour online workshops to extend over a 6-week period, broadly based on the Acumen Systems Practice course. We used a Miro world (explore it here) to host our conversations and to create a space that people could dive in and out of with their ideas when it suited them (drawing on Mandy Holden’s awesome design skills, and her training with Mona Ebdrup).

We structured the sessions like this:

- Week 1: Orientation and introductions. Connecting with each other, introduction to systems thinking and practice, reviewing the problem definition.

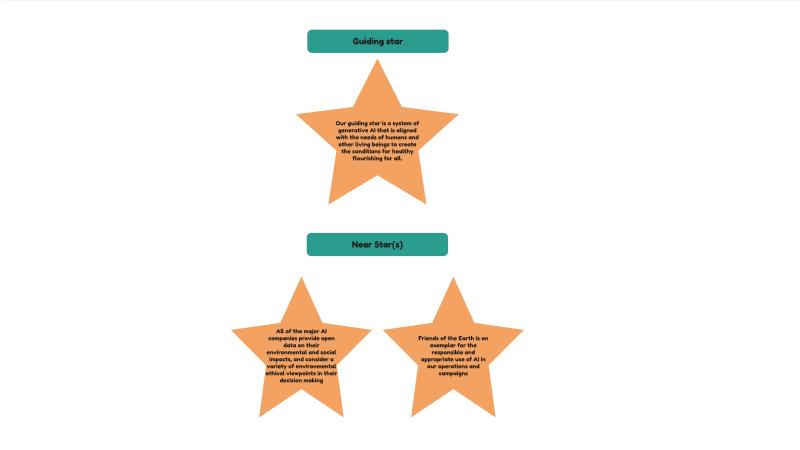

- Week 2: Stepping into the future. Using scenarios and the ‘three horizons’ model to envisage possible and desirable futures. Defining our guiding and near stars.

- Week 3: Mapping the system. Understanding the forces at work (‘enablers’ and ‘inhibitors’). Thinking about leverage points.

- Week 4: Strategy and idea generation. What are our ‘best guess’ solutions for what we could do?

- Week 5: Idea development – working up our preferred solutions as proposals, and presenting to a wider audience.

- Week 6: Learning and review.

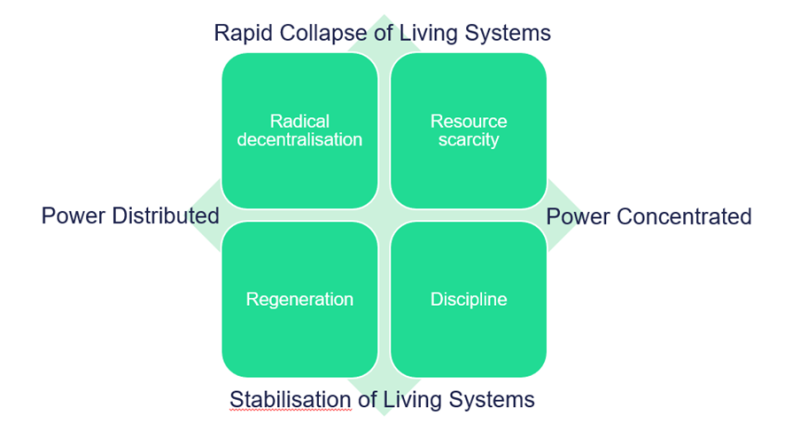

In advance we also circulated some curated reading, to ensure a base level of shared understanding (the AI Dilemma was core listening). In thinking about scenarios we used a matrix with the concentration of power on one axis and the future of living systems on the other (with a spectrum between cascading collapse and stabilisation). Our key learnings from this process are set out below.

Developing a common vision for a guiding star and near stars was difficult, particularly agreeing the near stars in advance of completing the enquiry: how can we know what is both feasible and desirable? Although the focus of the work was intended to be external and strategic, we also realised that in order to exercise influence we too need to be experienced with using the tools, and to be doing this in a way that exemplifies the practice we want to see. As a consequence we developed two ‘near’ stars, one focused on a campaigning goal and the other on tactical adoption within our organisation. There is a tension between developing stars, which feel akin to traditional campaign goals, and the wisdom of a systems approach that prioritises paying attention to the health of the system over outcomes or solutions.

Three themes

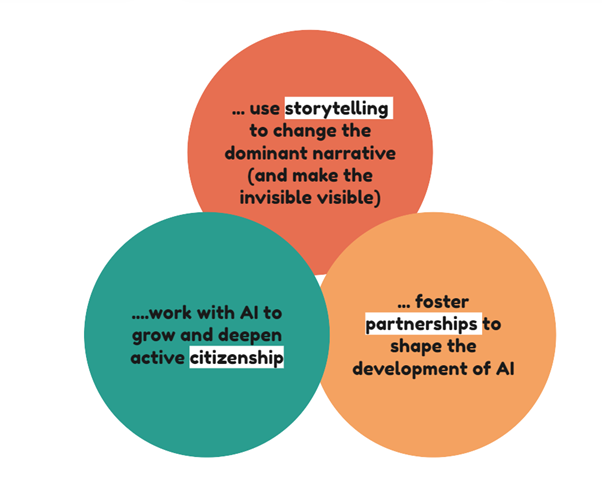

Three key inter-related themes emerged from the sense-making phase of our thinking as the most promising areas for intervention. These are the areas where we would like to develop further experiments. Look out for our subsequent blogs.

- Working with AI to grow and deepen active citizenship.

- Fostering partnerships to shape the development of AI technologies (targeted influencing strategies).

- Using storytelling to change the dominant narratives, and ‘make the invisible visible’ (from the hidden labour, to the resource demands, to the in-built bias).

What we learned

Freeing the imagination is difficult online. A combination of Miro world plus walk-and-talk sessions worked well for hybrid team building. But it is still really hard to create the space for the imagination to flourish in a collaborative space that is predominantly online.

Not everyone started from the same place. There was a huge amount of content to explore and absorb. Could we use subject-expert mentors to support participants to explore the content in the future, and also to prepare more provocative stimulus (like our friends at Huddlecraft did for this recent Web3 exploration)? Would a baseline survey about the participants’ knowledge and needs have helped here?

Confronting in-built bias. It was hard for us to centre an anti-racist perspective in an internal (colleagues only) lab that did not include racially marginalised people. How do we put the experience of algorithmic injustice at the heart of our thinking moving forward?

The process. There were lessons here too: it was great to include an AI participant, but we needed someone dedicated to ‘driving’ it. Some of the systems tools are best suited to existing problems: issues that are well known to all the participants and where there have been multiple unsuccessful attempts to ‘solve’ something. They work less well for speculative forward thinking, and for complex problems that are emerging but not fully here yet. Focusing on the three horizons model (we used this excellent intro video from Kate Raworth) and getting deep into it might deliver more in-depth insight, and be clearer for participants who are not so familiar with these models.

What we’ll do next

Our next step is to gather feedback on our themes and decide where there is the most potential. We will be extending this work in the autumn, looking to use rapid prototyping to turn emerging ideas into fundable propositions. We’re also very keen to contribute to coalitions of civil society actors looking to influence this space.

Please get in touch at [email protected] if you have ideas!