Mary Stevens, 05 Mar 2025

On February 10th we published our principles and practice on AI, co-authored with We Are Open and generously funded by the Green Screen Catalyst Fund. We are thrilled by the response so far and delighted to have already had opportunities to present it to a number of audiences, from a gathering of UK funders (collectively representing over £4bn of assets) convened by CAST and the National Lottery, to other international NGOs via the Innovation for Impact Network (alongside the Centre for Humane Technology, whom we’ve long admired).

“This looks brilliant – a substantive and well thought through piece of work that is sorely needed by the sector.” Sarah Watson, Head of Innovation, National Lottery Community Fund

It’s important for us to share our work in public. Audiences suggest new angles that we may not have considered, and questions arise – so we thought it helpful to set out what we’ve learned so far and what we think the next steps could be. Please do join us on this journey to reflect on the urgency of greening AI development and how together we can move this important agenda forward.

3 things we’ve learned

Some of the principles are easier to bring to life than others.

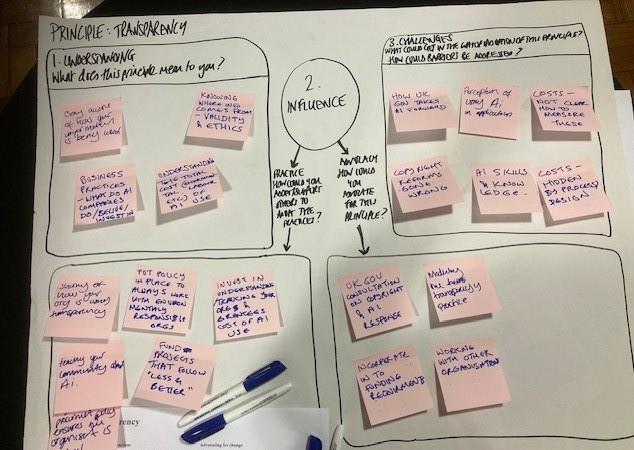

Most audiences can quickly grasp the value of mindful curiosity or transparency, but struggle more with inclusion or intersectionality. What might these mean in practice? We don’t have all the answers – the paper in many cases is more of a provocation and a set of questions than a pre-packaged set of solutions – but we recognise the need to make this clearer.

Connected by Data’s work on public engagement has been cited to us as a place where these questions are being explored: check out their unconference on 20th March if you want to dive in deeper.

I’ve also observed that the principles and practices could be more succinct, as there’s definitely some repetition in the recommendations. We could work towards version 2.0 if there’s sufficient interest.

The challenge of measurement.

But how bad are the impacts of AI development? And how do I know? Given that in many civil society organisations AI strategy is largely the responsibility of digital leads (despite the fact that, as David Knott, CEO of the National Lottery Community Fund put it in his opening remarks to the funder conference, this is first and foremost a social issue), it’s not surprising that many people are looking for metrics. How do I measure the Scope 3 emissions? But the point is that you can’t, because Big Tech isn’t anywhere near providing the data you’d need to do this. This is why advocacy is so important – we need to be demanding more transparency. Meanwhile, it’s encouraging to see the development of initiatives like the Technology Carbon Standard, with responsible tech companies stepping up to show what’s possible (and open-sourcing it).

The advocacy gap.

Lots of people, in lots of types of organisations, from tech companies (yes really, or at least the ones I meet at Green Tech South West) to civil society organisations and SMEs, feel deeply alarmed about the direction this Government is taking on AI. It was very disappointing that at the end of the summit in Paris the UK Government chose not to sign the international declaration on ‘open, inclusive, transparent, ethical and safe’ AI, firmly aligning us instead with the US bonfire of regulation. Most of these organisations are not natural campaigners – but they’d happily swing behind a good, well-coordinated movement for AI within planetary boundaries. It’s great to see Foxglove (with Rights Community Action and Global Action Plan) getting involved at the sharp edge of data centre permissions, but if funders want to see a movement develop around the AI-democracy-climate nexus they are going to have to step up and resource it.

What next – and where do you fit in?

We’d love to do more to bring the principles to life. Do you have a story to share about how you or your organisation is exploring curiosity, transparency, accountability, inclusion, sustainability, community or intersectionality? (Read the report to check out how we understand these.) If so, we’d love to feature you on this blog.

Are you part of a community of practice that’s looking to explore AI applications, and thinking through the ethics? Like this one, for example? We’ve created a discussion template you could use based on our report. And if you’re not, maybe you could start one? Let us know how you get on.

Finally, this is a static document, but the world of AI won’t stand still. There are also other people who are much better informed than we are about developing possibilities and trends. Maybe the guidance could transition to a co-owned, co-curated model over time? Is this something you’ve seen work elsewhere? We’d love to hear from you.

And in the interests of transparency, this article was written without AI assistance.